SEO in PWA – How to Optimize JavaScript in Progressive Web Apps

Are PWAs the new sexy of mobile tech? Yes, sir! Why? Well, for several reasons: in general, users are almost three...

To know what is working, you need to do a technical SEO review regularly. Basically, a technical SEO audit looks at your website through the eyes of search engines like Google and Bing.

There are millions of websites in the world today, and each one of these sites is competing for the top spot in SERPs. After all, the top spot translates to better visibility and lots of eyeballs. If everything is done right, you can convert these eyeballs to loyal paying customers.

To make it to the top spot, you need to perform a technical SEO audit regularly. Google’s algorithms change all the time, your site gets new features, too, and you make changes. You have to assume at the very beginning that SEO development for your business is a job that cannot be done just once. Continuous improvements are crucial. To do this effectively, you need to know what to optimize. And that’s what a technical SEO audit service is for.


All good marketers want to improve the visibility and search engine ranking of their websites. To do that, they have to optimize the elements of their website for search engines, which is exactly what on-site SEO is. On-site SEO is also known as on-page SEO.

This is basically a set of technical tasks. It involves making sure your web pages, meta tags, content, and overall site structure are optimized for your target keywords. Simply put, on-site SEO is what you do on your website to boost visibility. In contrast, off-site SEO is what you do outside your website to boost visibility and search engine ranking.

Now that you understand the basics of on-site SEO, here is what you need to know about a technical SEO audit.

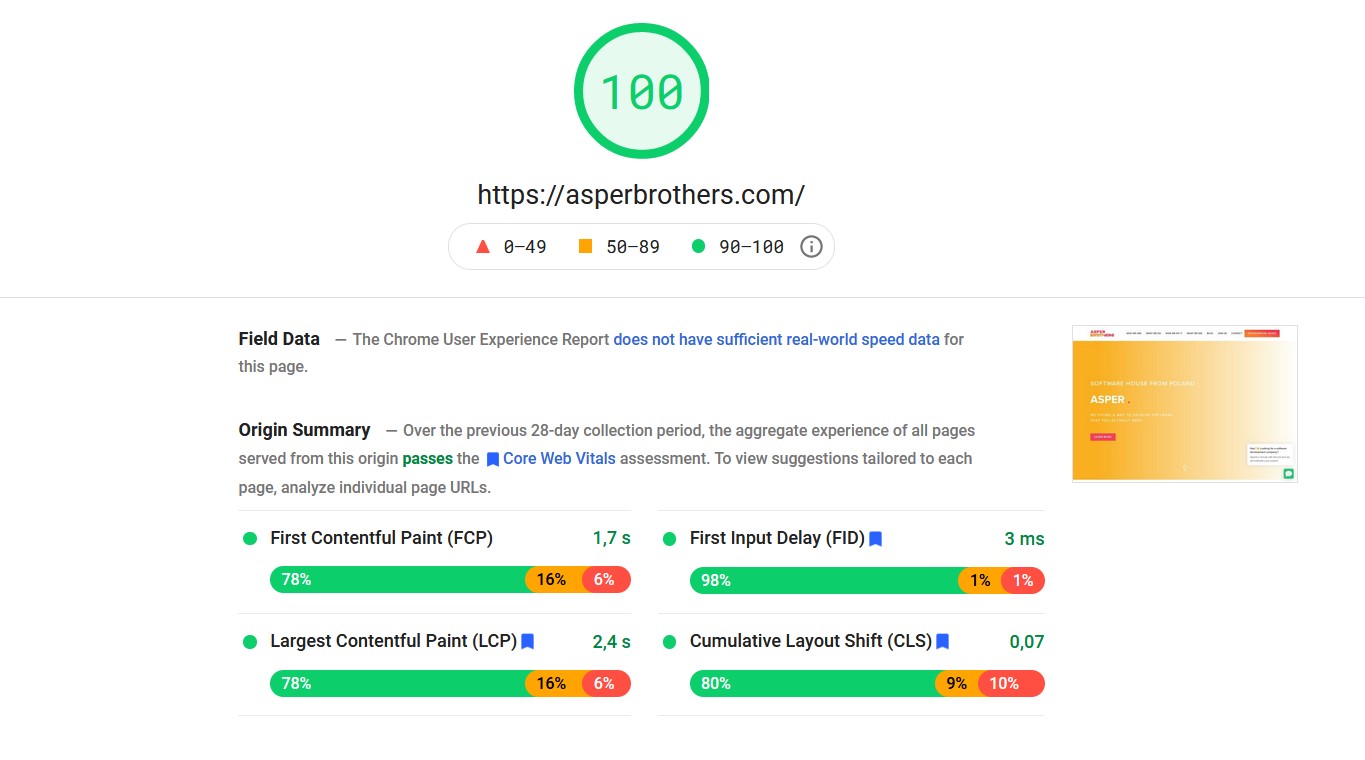

Scoring 100 on PageSpeed Insights is the goal of many SEO teams. However, you have to remember the real goal of technical optimization: the best possible user experience. That’s why, in addition to metrics, it makes sense to study user behavior on the site.

Ranking high on SERPs is not rocket science, but it requires smart and diligent work. Previously, website owners could trick Google’s algorithm by merely stuffing keywords and backlinking from micro-sites. Well, those days are over. If you want to be at the top, you’ve got to refine your SEO strategy.

A technical SEO audit is pretty much using the eyes of Google to look through your website. It’s a digital health check that lets you analyze your website’s ranking ability. Think of it as a process of checking the technical aspect of your website’s SEO.

Before ranking happens, search engine bots crawl the internet to find websites and web pages. These pages are then checked against various ranking factors; Google's algorithm uses more than 200 ranking signals. Backlinko has pretty much covered the updated Google ranking factors. Whichever industry you are in, your competitors are keeping up with the latest SEO changes, and that's why you should too.

To keep up with these changes, you've got to monitor the health of your website, and that's where a technical SEO audit comes into play. You can perform a technical SEO audit using tools like Moz, SEMrush, Screaming Frog, Google Search Console, Ahrefs, etc. However, even good tools have their limitations. First of all, they often do not consider the specifics of your site and do not know what has to stay and what can be dropped.

Additionally, tools often have limited access to your site and do not have all the data they need to see the big picture. That’s why the best SEO audit is one performed by a human.

Of course, a human uses many tools, too (we’ll write more about that later), but above all, an experienced engineer can consider the particular situation of a given site. A human will also directly answer your questions and concerns about particular issues.

As earlier stated, an SEO audit helps you to know your website’s health. This way, you will know what to improve on and pinpoint things dragging you behind. So here comes the next big question – why should you conduct an SEO audit regularly?

“The SEO audits we provide are not only a way to achieve better performance and results in Google. They are also an opportunity to improve user experience. Our clients say the recommended changes increase the effectiveness of their website goals. We believe that SEO should be looked at holistically, as part of an overall business strategy.” (COO, ASPER BROTHERS)

Google's search algorithm changes regularly, and you should change with it. If you want to dominate your industry, you should constantly look out for what's new in the digital space. To do that, you've got to perform technical SEO audits continually and adapt to the changes.

Regularly auditing your website for technical factors is important to ensure your digital asset is aligned with Google's best practices. Here are the areas worth analyzing in a technical SEO audit.

There are different types of SEO audits. Some focus more on the site’s content architecture, and others take into account internal and external linking. A technical SEO audit is different. It mainly helps you understand how Google’s robots see and index your site and how individual elements on the page affect crawl speed and user experience.

A good technical audit also includes assessing the technology used: languages, JS framework, and the amount of external resources on the site. The more experience and technical background the engineer performing the audit has, the more specific the recommendations you will get.

You need to know that a good audit is not just an analysis of the situation, but also recommendations on what changes to make to improve performance results and user experience.

Websites are designed for users, not just for search engines, and Google increasingly favors sites that care about their users' experience.

That's why you should keep your users in mind while designing a website, and these users include people who are not using a JavaScript-enabled browser.

To test your website's accessibility, preview it in a browser with JavaScript turned off. You can also test it in a text-only browser like Lynx.

Disabling JavaScript allows you to see what content is generated on the page without JS. GIF source: https://stackoverflow.com/

A text-only browser helps you determine the accessibility of a site and helps you identify other content like the text embedded in images.
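If you want to automate this check, you can approximate what a client without JavaScript receives by downloading the raw HTML and extracting its text without running any scripts. Below is a minimal sketch using only Python's standard library; the helper names (`visible_text`, `contains_phrase`, `fetch_raw_html`) are hypothetical, and it is a rough heuristic rather than a replacement for viewing the page in a browser with JavaScript disabled.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class _TextExtractor(HTMLParser):
    """Collects text present in the HTML itself, skipping script/style/template bodies."""

    SKIP = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside an element whose text is not page content
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Text available without executing any JavaScript (no JS engine is involved here)."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def contains_phrase(html: str, phrase: str) -> bool:
    """Case-insensitive check that key content is already in the server-sent HTML."""
    return phrase.lower() in visible_text(html).lower()


def fetch_raw_html(url: str) -> str:
    """Download a page exactly as served; scripts are not run."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0 (compatible; seo-audit-sketch)"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

For example, if `contains_phrase(fetch_raw_html(url), "your key heading")` returns `False`, the heading is most likely injected by JavaScript rather than sent in the HTML.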

Indexing is the process of organizing the information on a web page and storing it in the search engine's database. This helps search engines respond immediately to users' queries. For example, Google uses the XML sitemap to pinpoint what's important on a website, although web pages not listed in your sitemap can still be indexed by Google.

“The Google Search index contains hundreds of billions of webpages and is well over 100,000,000 gigabytes in size. It’s like the index in the back of a book — with an entry for every word seen on every webpage we index. When we index a webpage, we add it to the entries for all of the words it contains.”

Source – Google.com

It is essential to have an XML sitemap for your website so that search engine spiders can index all your major web pages effectively. All in all, however, it is best to focus on overall site quality and not just on the pages listed in sitemap.xml.
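For a small site, a sitemap can be generated with a few lines of code. The sketch below, written in Python with the standard library only, follows the sitemaps.org protocol (`urlset`, `url`, `loc`, `lastmod`); the function name and the input format (a list of URL/date pairs) are assumptions made for illustration. In practice, most CMSs and frameworks can generate the file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build a minimal sitemap.xml from (absolute URL, last-modified date) pairs."""
    ET.register_namespace("", SITEMAP_NS)  # serialize as xmlns="..." without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod  # W3C date, e.g. 2021-05-01
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Write the returned bytes to `sitemap.xml` at the site root, then point crawlers to it with a `Sitemap:` line in robots.txt or submit it in Google Search Console.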

The need to meet Core Web Vitals standards is one of the most common reasons for initiating technical audits.

Core Web Vitals are now on everyone's lips. Google has started to include this set of metrics as a ranking factor. It has also given developers better insight into the status of these metrics through changes in Search Console, which now includes “Page Experience” and “Core Web Vitals” reports.

But what are Core Web Vitals? Simply put, according to Google, they are 3 important metrics that allow you to measure how well your website is optimized for user experience. They are Largest Contentful Paint (LCP), First Input Delay (FID) and Cumulative Layout Shift (CLS). Let’s briefly review each of these.
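To make the thresholds concrete, here is a small Python sketch that rates a metric value using the bands Google publishes (LCP good at or below 2.5 s, poor above 4 s; FID good at or below 100 ms, poor above 300 ms; CLS good at or below 0.1, poor above 0.25). Google assesses these at the 75th percentile of page loads, so the sketch also includes a simple nearest-rank percentile. Note that in March 2024 Google replaced FID with Interaction to Next Paint (INP; good at or below 200 ms, poor above 500 ms), which is why INP appears in the table too. The function names are hypothetical.

```python
import math

# (upper bound for "good", upper bound for "needs improvement"); anything above is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "FID": (100, 300),    # milliseconds (replaced by INP in March 2024)
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile using the nearest-rank method (samples must be non-empty)."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric: str, value: float) -> str:
    """Classify one metric value into Google's three buckets."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", p75([1800, 2100, 2400, 5200]))` rates the 75th-percentile value of 2400 ms as "good", even though one visit was slow.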

A common question is how Core Web Vitals differ from PageSpeed results. The PageSpeed Insights score is a number from 0 to 100 that measures your site's overall performance and loading speed. The tool also gives many tips on how to optimize this performance.

Core Web Vitals focus primarily on the impact of performance on user experience. They show lab data and real data from users, so we can realistically assess the impact of site optimization on the end-user experience. After all, that's what we ultimately optimize a site for: to make it more accessible and friendly on as many devices and for as many users as possible.

It is important to know how fast your website or web page loads. If your website takes more than 5 seconds to load, you might be losing a large share of your audience. According to Portent, load times of 0 to 4 seconds are ideal for conversion.

The conversion rates of your website drop by 4.42% with each additional second of load time.

A canonical URL signals to search engines which version of a page is preferred, which helps prevent duplicate content issues by consolidating indexing and linking properties into a single URL. This is especially important if you have the same content at different URLs that can cannibalize each other. By setting a canonical URL, you show Google which URL you consider most important for a given piece of content and keyword.

Tools like Screaming Frog SEO Spider give a quick overview of the canonical implementation across your website and report common errors.
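A canonical URL is declared with a `<link rel="canonical" href="...">` element in the page's `<head>` (it can also be sent as an HTTP `Link` header). As a rough sketch of what such tools check, the Python snippet below extracts the canonical URLs from a page's HTML and resolves relative `href` values; the helper name is hypothetical, and the sketch ignores HTTP headers.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalFinder(HTMLParser):
    """Records the href of every <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rel_tokens = (a.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_tokens and a.get("href"):
            self.hrefs.append(a["href"])


def canonical_urls(page_url: str, html: str) -> list[str]:
    """Absolute canonical URLs declared in the HTML (HTTP Link headers are not checked)."""
    finder = _CanonicalFinder()
    finder.feed(html)
    return [urljoin(page_url, href) for href in finder.hrefs]
```

An empty list means the canonical is missing, more than one entry means conflicting signals, and a URL different from the page's own tells Google the page defers to another version.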

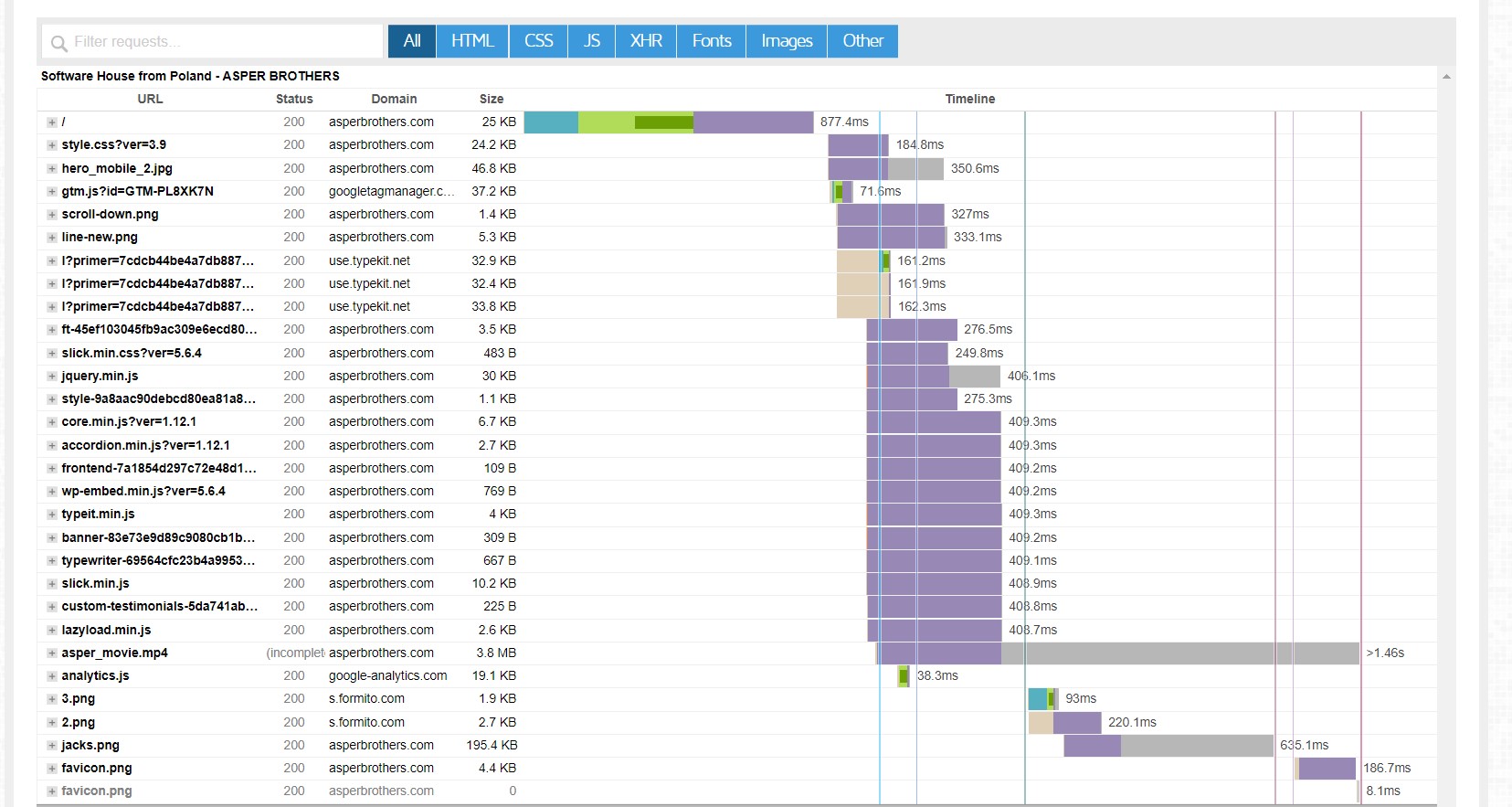

You can have the best-optimized site, serving pure HTML without any JavaScript (although that's hardly possible today), but if you plug in too many external resources, your site's performance will plummet.

Let me give you an example. Hotjar: you are familiar with it, right? It's a great tool, but it is not without impact on your site. It's an example of external JavaScript that has to be downloaded to the user's browser, which affects performance. Another example is a chatbot. This is also a great tool that helps you achieve better conversions, but to work, it often needs to load JavaScript fetched from an external server.

Another popular example is ads. Ad providers try to have fast ad servers, but displaying an ad always requires downloading elements from external servers.

What is the solution? Use external tools, be they SEO link-building tools or SEO audit tools, wisely: limit them, or enable them only when you actually use them. Sometimes it is also better to write something custom than to download a whole big JS library that will weigh the page down.
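To get a first inventory of third-party code, you can list the external hosts that a page's HTML pulls scripts, stylesheets, and iframes from. The Python sketch below does this with the standard-library HTML parser. The function name and the example hosts are hypothetical, and it only sees resources referenced in the HTML itself: anything injected at runtime (for example by a tag manager) shows up only in a waterfall view of the actual page load.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _ResourceCollector(HTMLParser):
    """Collects URLs of scripts, stylesheets and iframes referenced by a page."""

    def __init__(self) -> None:
        super().__init__()
        self.urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag in ("script", "iframe") and a.get("src"):
            self.urls.append(a["src"])
        elif tag == "link" and "stylesheet" in (a.get("rel") or "").lower().split() and a.get("href"):
            self.urls.append(a["href"])


def third_party_hosts(page_url: str, html: str) -> dict[str, int]:
    """Count referenced resources per host other than the page's own host.

    Subdomains of your own site (e.g. static.example.com) are counted as external here.
    """
    collector = _ResourceCollector()
    collector.feed(html)
    own_host = urlparse(page_url).hostname
    counts: dict[str, int] = {}
    for ref in collector.urls:
        host = urlparse(urljoin(page_url, ref)).hostname  # resolves relative and // URLs
        if host and host != own_host:
            counts[host] = counts.get(host, 0) + 1
    return counts
```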

To identify the external resources being loaded and their influence on page loading, use the waterfall view. You can get it in Chrome DevTools, but also on sites such as gtmetrix.com.
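Chrome DevTools can export the Network tab's recording as a HAR file (a JSON format). As a sketch, assuming such an export, the Python snippet below totals requests, response body bytes, and request time per host, slowest host first. The function names are hypothetical. Because requests run in parallel, summed times overstate the real wall-clock cost, so treat them as a ranking signal rather than a load-time figure.

```python
import json
from collections import defaultdict
from urllib.parse import urlparse


def summarize_har(har: dict) -> list[tuple[str, int, int, float]]:
    """Return (host, requests, response body bytes, summed ms) per host, slowest first."""
    stats = defaultdict(lambda: [0, 0, 0.0])  # host -> [requests, bytes, ms]
    for entry in har["log"]["entries"]:
        host = urlparse(entry["request"]["url"]).hostname or "?"
        size = entry["response"].get("bodySize", -1)
        s = stats[host]
        s[0] += 1
        s[1] += max(size, 0)  # HAR uses -1 when the size is unknown
        s[2] += entry.get("time", 0.0)  # total elapsed time of the request in ms
    rows = [(host, n, b, ms) for host, (n, b, ms) in stats.items()]
    return sorted(rows, key=lambda row: row[3], reverse=True)


def load_har(path: str) -> dict:
    """Read a HAR file exported from the browser."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```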

When it comes to optimizing images, several things matter. First of all, size matters. It makes no sense to load huge images that are then scaled down to smaller sizes. It's better to have images cropped to the needs of a particular section. It also helps to use the srcset attribute, which lets the browser pick the right image for the device: one for a phone, another for a desktop.
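Here is a minimal Python sketch that builds an `<img>` tag with `srcset` and `sizes`, assuming you already generate resized copies of each image under a hypothetical naming scheme (`hero-480w.jpg`, `hero-960w.jpg`, and so on). The `w` descriptors tell the browser each file's width, and `sizes` tells it how wide the image will be displayed, so it can download the smallest adequate file.

```python
from html import escape


def responsive_img(base: str, widths: list[int], sizes: str, alt: str) -> str:
    """Build an <img> tag whose srcset lists pre-resized variants of one image.

    Assumes a hypothetical naming scheme where hero.jpg has variants hero-480w.jpg,
    hero-960w.jpg, ... already generated on the server. `base` must have a file extension.
    """
    stem, _, ext = base.rpartition(".")
    ordered = sorted(widths)
    candidates = ", ".join(f"{stem}-{w}w.{ext} {w}w" for w in ordered)
    fallback = f"{stem}-{ordered[-1]}w.{ext}"  # used by browsers that ignore srcset
    return (
        f'<img src="{escape(fallback)}" srcset="{escape(candidates)}" '
        f'sizes="{escape(sizes)}" alt="{escape(alt)}">'
    )
```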

The second thing is the compression ratio. It's best to upload images that are already properly compressed. If that isn't possible, you can use your CMS's built-in functions or implement a server-side solution that does it for you.

Another thing is to use a CDN. For example, Cloudflare may be a good option if your website has a lot of graphic files. This will ensure that graphic resources are loaded from servers closer to the user’s location.

Lazy Loading is a very clever solution. Simply put, Lazy Loading allows you to load elements as you scroll down the page. Elements (for example, photos) can be loaded while the page is being used, making the first loading of the page much faster.

Lazy Loading can be used for many elements – images, whole sections, videos, illustrations, etc.

It can be implemented natively (many browsers already support the loading="lazy" attribute) or with dedicated libraries. This is one of the fastest and most effective ways to reduce page load time.

Chrome has supported native lazy loading since version 76.
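During an audit, you can quickly list images and iframes that are not lazy-loaded. The Python sketch below flags `<img>` and `<iframe>` elements without `loading="lazy"`. It skips the first element by default on the assumption that it is above the fold: lazy-loading the hero image can delay Largest Contentful Paint. The helper names and the `skip_first` parameter are hypothetical.

```python
from html.parser import HTMLParser


class _LazyAudit(HTMLParser):
    """Records src and loading attributes of <img> and <iframe> elements in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: list[dict] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("img", "iframe"):
            a = dict(attrs)
            self.elements.append({"src": a.get("src"), "loading": (a.get("loading") or "").lower()})


def not_lazy(html: str, skip_first: int = 1) -> list[str]:
    """Sources of img/iframe elements without loading="lazy".

    The first `skip_first` elements are ignored, assuming they are above the fold.
    """
    audit = _LazyAudit()
    audit.feed(html)
    return [el["src"] for el in audit.elements[skip_first:] if el["loading"] != "lazy"]
```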

Sometimes the requested URL goes through multiple redirects before the destination URL is served. This is commonly referred to as a redirect chain. Too many redirects can cause problems such as delayed crawling, slower page speed, and loss of link equity.

It is essential to audit your website to check whether any of your web pages go through a chain of redirects.
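A redirect chain can be traced by requesting each URL without following redirects automatically and reading the `Location` header at every hop. The Python sketch below does exactly that; the function names are hypothetical, and the `fetch` parameter lets you swap in a fake for testing. It sends `HEAD` requests, which a few servers handle differently from `GET`, so treat the result as an approximation.

```python
import http.client
from typing import Callable, Optional
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}
Fetch = Callable[[str], tuple[int, Optional[str]]]  # url -> (status, Location header)


def head_request(url: str) -> tuple[int, Optional[str]]:
    """Issue one HEAD request without following redirects."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=10)
    try:
        path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url: str, fetch: Fetch = head_request, max_hops: int = 10) -> list[tuple[str, int]]:
    """Follow redirects hop by hop; return [(url, status), ...] ending at the final URL."""
    chain: list[tuple[str, int]] = []
    while True:
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_CODES or not location:
            return chain
        url = urljoin(url, location)  # Location may be relative
        if any(url == seen for seen, _ in chain):
            raise RuntimeError(f"redirect loop at {url}")
        if len(chain) > max_hops:
            raise RuntimeError("too many redirects")
```

A result with more than two entries means more than one hop; ideally, internal links should point straight at the final URL.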

As you probably know – CSS is responsible for styling (appearance) of elements on your page, and JavaScript provides interactivity and some functionality. This is, of course, a big simplification. Practically every website today uses these two types of files. And the rule is simple – the smaller these files are, the better for load times.

There are many ways to optimize CSS and JS. Compression is the easiest. But the most important thing is to make sure these files contain only what is really necessary. You can load different resources on each subpage. For example, when some functionality exists only on the main page, the JS file responsible for it does not need to be loaded on other subpages. Your frontend development team should take this into account in the project structure.
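To see how much compression saves, you can gzip a file locally and compare sizes. In production, your server or CDN usually compresses responses on the fly (with gzip or Brotli); the Python sketch below only estimates the saving, and the function name is hypothetical.

```python
import gzip


def compression_report(text: str) -> tuple[int, int, float]:
    """Return (raw bytes, gzipped bytes, percent saved) for a text asset such as CSS or JS."""
    raw = text.encode("utf-8")
    if not raw:
        return 0, 0, 0.0
    packed = gzip.compress(raw, compresslevel=9)
    saved = 100 * (1 - len(packed) / len(raw))
    return len(raw), len(packed), round(saved, 1)
```

Repetitive text such as CSS usually compresses very well, which is why enabling compression is such a cheap win.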

Additionally, it is sometimes a good idea to load CSS inline within the page code rather than as a separate file. This is especially recommended for key page elements such as menus or header sections. Thanks to this, in many cases, the browser will render your page faster.

It is vital to enable caching of JavaScript and CSS files. When caching is not enabled, the user's browser has to request the same assets again and again, which makes the site load slowly.
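Caching is controlled mainly by the `Cache-Control` response header. A common pattern is to give versioned files (for example `app.3f2a1c.js`, a hypothetical name) a long lifetime such as `Cache-Control: public, max-age=31536000, immutable`. The Python sketch below parses the header and checks whether an asset is cached for at least a threshold; the helper names are hypothetical, and the 30-day default is an arbitrary assumption, not a Google requirement.

```python
from typing import Optional


def parse_cache_control(value: str) -> dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: argument or None}."""
    directives: dict[str, Optional[str]] = {}
    for part in value.split(","):
        name, _, arg = part.strip().partition("=")
        if name:
            directives[name.lower()] = arg.strip('"') or None
    return directives


def is_long_cached(value: str, min_seconds: int = 30 * 24 * 3600) -> bool:
    """True if the header lets browsers reuse the asset for at least `min_seconds`."""
    d = parse_cache_control(value)
    if "no-store" in d or "no-cache" in d:
        return False
    try:
        return int(d.get("max-age") or 0) >= min_seconds
    except ValueError:  # malformed max-age value
        return False
```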

Having an SSL certificate is crucial for your SEO efforts. An SSL certificate helps maintain a secure connection between the web server and the browser.

SSL implementation is one of the ranking signals for Google, and not having one can put a question mark on your website’s authority.

Let's Encrypt is the clear winner when it comes to SSL usage statistics. As of May 2019, the certificate authority supported over 169 million active fully-qualified domains. What's more, it was responsible for over 98 million active certificates and over 51 million active registered domains.

source: hostingtribunal.com
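Certificates expire, and an expired one breaks the secure connection for every visitor. The Python sketch below, using only the standard library's `ssl` and `socket` modules, reads a server certificate's `notAfter` date and converts it to days remaining; the function names are hypothetical. You could run it on a schedule and alert when the value drops below a threshold of your choice.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect over TLS (verifying the certificate) and return its notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a notAfter date such as 'Jan  1 00:00:00 2030 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    current = time.time() if now is None else now
    return (expires - current) / 86400
```

For example, `days_until_expiry(fetch_not_after("example.com"))` returns the number of days left on that site's certificate.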

Structured data is markup added to a web page to give search engines accurate, additional details about the page's content.

Structured data, or schema markup, typically uses the vocabulary defined by Schema.org. It helps Google and other search engines return information to users more accurately.
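Schema.org markup is most often added as JSON-LD in a `<script type="application/ld+json">` element. As an illustration, the Python sketch below generates a minimal `Article` object; the function name and the sample values are hypothetical, and which properties Google actually uses for rich results is documented per type in Google Search Central.

```python
import json


def article_json_ld(headline: str, author: str, published: str, url: str) -> str:
    """Return a JSON-LD <script> block describing an article (schema.org/Article)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601, e.g. "2021-05-10"
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so a value can never close the surrounding <script> element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Validate the output with Google's Rich Results Test or the Schema Markup Validator before deploying.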

Redirects send human visitors and search engine bots to a different URL from the one they requested originally.

The fewer redirects, the better because Google’s robot will have less work to do. Of course, some redirects are advisable (e.g. 301 redirects from a version with “www” to one without “www”). But the general rule is to do it wisely.

With Google’s “mobile-first” approach, it is now even more crucial to have responsive and mobile-friendly websites.

You need to perform a usability audit to understand how your website performs on particular devices. Google's Mobile-Friendly Test is a free tool that determines how mobile-friendly your website or a web page is.

The robots.txt file tells search engine crawlers which pages or files they may crawl and which they should not.

A website audit tool gives you a clear idea of how your web pages are being crawled and how well your robots.txt file is implemented.
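You can also check robots.txt rules yourself with Python's built-in `urllib.robotparser`. The sketch below parses rules held in memory (the rules shown are a hypothetical example); for a live site, `RobotFileParser("https://example.com/robots.txt")` followed by `.read()` fetches the file. One caveat: Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) matching path, so results can differ for files that mix `Allow` and `Disallow`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules used for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: Googlebot-Image
Disallow: /
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # mark the rules as loaded so can_fetch() does not treat them as unread
    return parser


rules = load_rules(ROBOTS_TXT)
for agent, url in [
    ("Googlebot", "https://example.com/blog/post"),
    ("Googlebot", "https://example.com/cart/checkout"),
    ("Googlebot-Image", "https://example.com/img/photo.png"),
]:
    print(agent, url, "allowed" if rules.can_fetch(agent, url) else "blocked")
```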

SEO is such a big industry today that there are many useful tools on the market to support SEO auditing. However, a specialized technical audit (especially in the JS area) is such a specific activity that tools should be treated only as a means to an end. All of the items listed below are supportive and help diagnose areas worthy of attention and deeper analysis. Personally, I find Chrome DevTools the most helpful of all these tools. In the right hands, DevTools will identify most technical issues with a website.

Chrome DevTools is a powerful tool built into the Google Chrome browser. I'm not going to describe all its features here, but I will list those worth using first. For technical analysis, it's often a good idea to view the page as Googlebot (by overriding the user agent). Another useful feature is disabling JavaScript to see what content is generated without JS. Google does render JS, but in my opinion it's best when key content elements also work without it. Additionally, you can simulate page load time on different devices and at different network speeds. Very useful.

Another useful feature is the analysis of loaded resources in the “Network” tab. This is where we can discover the largest elements loaded by the page, such as CSS and JS files or external resources that increase page load time. The breakdown by resource type, with filtering and sorting, is handy. The “Capture screenshots” option also lets you observe the progress of page rendering. It is worth combining this with More Tools > Rendering. Once that is enabled, you can highlight Layout Shift Regions or analyze Core Web Vitals. In any case, I recommend familiarizing yourself with all the features available under “More tools”. People often overlook them, and they really are useful.

DevTools is something many web developers work with daily. Therefore, it is a good idea to engage someone with developer experience to do performance and technical analysis. The audit will surely go more smoothly with such a person.

PageSpeed Insights gives you an overall picture of your website's speed. Just enter your URL, and PageSpeed Insights will analyze your web content and recommend ways to improve your page load speed.

Nowadays, page load speed is one of the factors that determine your ranking in the SERP. If it takes time for your page to load, you will likely experience a high bounce rate. And if visitors do not interact with your website before leaving, you will pretty much have poor conversion performance and poor user experience.

One fascinating feature of PageSpeed Insights is its use of field data, that is, data captured from real visitors to your website (it comes from the Chrome User Experience Report). This data reflects visitors' actual experience, and the tool also offers actionable steps to help you optimize your website for better performance.
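PageSpeed Insights is also available as an API, which is handy for checking many URLs. The Python sketch below builds a request to the v5 `runPagespeed` endpoint and pulls out the lab performance score (`lighthouseResult.categories.performance.score`, from 0 to 1) and the field-data percentiles under `loadingExperience.metrics`. The helper names are hypothetical, an API key is recommended for regular use, and field data may be missing for URLs without enough real-user traffic.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page: str, strategy: str = "mobile", api_key: str = "") -> str:
    """Build the API URL for one page; strategy is "mobile" or "desktop"."""
    params = {"url": page, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def summarize_psi(report: dict) -> dict:
    """Extract the lab score (0-100) and field-data (percentile, category) pairs."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    field = report.get("loadingExperience", {}).get("metrics", {})  # absent for low-traffic URLs
    return {
        "lab_score": round(score * 100),
        "field": {name: (m.get("percentile"), m.get("category")) for name, m in field.items()},
    }


def run_psi(page: str, api_key: str = "") -> dict:
    """Call the live API (network access required) and summarize the response."""
    with urlopen(psi_request_url(page, api_key=api_key), timeout=60) as resp:
        return summarize_psi(json.load(resp))
```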

Lighthouse helps improve the quality of your web pages. It audits areas such as performance, SEO, accessibility, and progressive web app features.

To use Lighthouse, all you need to do is enter the URL of the website. The audit then runs and generates a comprehensive report on the web page.

From there, you get to see how to improve the web page.

Lighthouse is a great way to learn about a few key metrics that evaluate not only performance but also user experience.

One way to get ahead in the digital marketing world is to keep a close eye on your performance in SERPs, and Google Search Console helps you do just that.

This technical SEO audit tool helps you to diagnose common SEO issues. You can also use the tool to ascertain that Google’s web crawlers can access your website and that new pages are indexed at the right time.

You can configure it to send you alerts whenever there are indexing issues on your website.

What's more, Google Search Console allows marketers to track their keyword ranking positions on search engine result pages.

Screaming Frog is one of the giants in the industry, and the company’s SEO spider can rapidly crawl websites of any size. It also delivers SEO recommendations to the users.

It can pinpoint web pages with missing or poorly optimized metadata and broken links, help diagnose and fix redirect chain issues, and uncover duplicate pages.
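As a rough idea of what such a crawler checks, the Python sketch below takes already-downloaded pages (a hypothetical `{url: html}` dict) and reports missing titles, missing meta descriptions, and titles shared by more than one URL. The helper names are hypothetical, and real crawlers do far more (fetching, following links, rendering), so treat it as an illustration only.

```python
from collections import defaultdict
from html.parser import HTMLParser


class _HeadMeta(HTMLParser):
    """Captures the <title> text and the meta description of one page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.description = (a.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_metadata(pages: dict[str, str]) -> dict[str, list[str]]:
    """Report URLs with missing titles/descriptions and URLs sharing a title."""
    report: dict[str, list[str]] = {"missing_title": [], "missing_description": [], "duplicate_title": []}
    by_title: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        meta = _HeadMeta()
        meta.feed(html)
        title = " ".join(meta.title.split())  # normalize whitespace
        if title:
            by_title[title.lower()].append(url)
        else:
            report["missing_title"].append(url)
        if not meta.description:
            report["missing_description"].append(url)
    for urls in by_title.values():
        if len(urls) > 1:
            report["duplicate_title"].extend(urls)
    return report
```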

If you manage a team, then DeepCrawl is probably the best fit for you. It’s an enterprise-level technical SEO audit tool that delivers customized reporting.

With DeepCrawl, you can test XML sitemaps, find broken links, monitor page performance and speed, and ascertain content quality. One excellent feature is the historical data view. The feature lets you compare results and identify what needs to be fixed.

A technical SEO audit is good to do after every major site update and implementation of new features. And it is certainly necessary when launching a new site. But how to assemble the right team to run the audit?

The ideal team for a technical SEO audit service should include:

After the audit, it is certainly good to determine how the changes will be implemented on the website. Of course, it all depends on whether the site owners have their own team of developers or whether it is necessary to hire a dedicated team of developers.

A technical SEO audit can have different levels of sophistication and different levels of detail. One thing is certain. In the changing world of search engine requirements, it makes sense to stay on top of your own site’s optimization and performance levels. It’s worth getting started. Even small changes to your site often pay huge dividends in improving your position in Google.
