
To make sure search engines prioritize scanning and indexing the most important pages on your website, optimizing your crawl budget is crucial. Neglecting crawl budget optimization can reduce your website’s visibility in search engine result pages and thus negatively affect its overall SEO performance.

In this article, we’ll talk about the importance of the crawl budget and explain how to manage and optimize it effectively. You’ll learn how to check how many pages on your website get scanned and which are the ones you should prioritize.

Let’s dive in and learn how to optimize both your crawl budget and crawl rate for better SEO performance.

Whenever a search engine spider scans a site, it looks at various features such as site speed, server errors, and internal linking. Based on these factors, the search engine decides how many pages of the website will get scanned.

The crawl budget, then, is the limit that search engines set on the number of pages they will scan on a website. For example, a website with 10,000 pages and frequent content updates may be scanned daily, but it’s still limited by the spider’s time and resources. Supposing that Google crawls 500 of your pages per day, it would take 20 days to scan the entire website. However, if Google’s crawl budget for that site is 1,000 pages per day, it would take half the time (10 days). Poor-quality content or broken links can slow down the crawling process, so they may need to be fixed first.
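The arithmetic in the example above can be sketched in a few lines of Python. This is only an illustration: the function name `days_to_crawl` is made up here, and it assumes a constant daily crawl rate, which real crawlers do not guarantee.

```python
import math


def days_to_crawl(total_pages: int, pages_per_day: int) -> int:
    """Return the number of whole days needed to crawl every page once."""
    if pages_per_day <= 0:
        raise ValueError("pages_per_day must be positive")
    # Round up: a partially used day still counts as a day.
    return math.ceil(total_pages / pages_per_day)


print(days_to_crawl(10_000, 500))    # 20
print(days_to_crawl(10_000, 1_000))  # 10
```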

By optimizing your crawl budget you enhance your crawl rate – the rate at which search engines scan your site. This way you’ll increase your chances of ranking higher in Google’s search results.

Crawl budget optimization is a critical aspect of SEO that can significantly improve your website’s visibility and its incoming organic traffic. It involves fine-tuning the website to ensure that search engine crawlers can access and index the site’s most important pages efficiently.

A recent study by OnCrawl found that optimizing your crawl budget can lead to a significant boost in key SEO metrics. The study revealed that proper crawl budget optimization results in an average increase of 30% in the number of pages crawled, a 15% increase in organic traffic, and a 10% increase in the number of indexed pages. By analyzing crawl stats and optimizing crawl budgets effectively, website owners and SEO professionals can achieve better search engine visibility and drive more traffic to their websites as a result.

Proper crawl budget optimization can result in a significant increase in the number of pages crawled, indexed pages, and organic traffic. We found that improving site speed, internal linking, creating an XML sitemap, monitoring server errors, and using canonical tags helps optimize our clients’ crawl budgets. Increasing the number of pages that search engines crawl leads to indexing more content and improved rankings, resulting in more organic traffic. CEO, ASPER BROTHERS

Naturally, in order to start optimizing your crawl budget, you’ll need to know how many resources Google and other search engines have allocated for your website in the first place. Here are a few ways you can find that out:

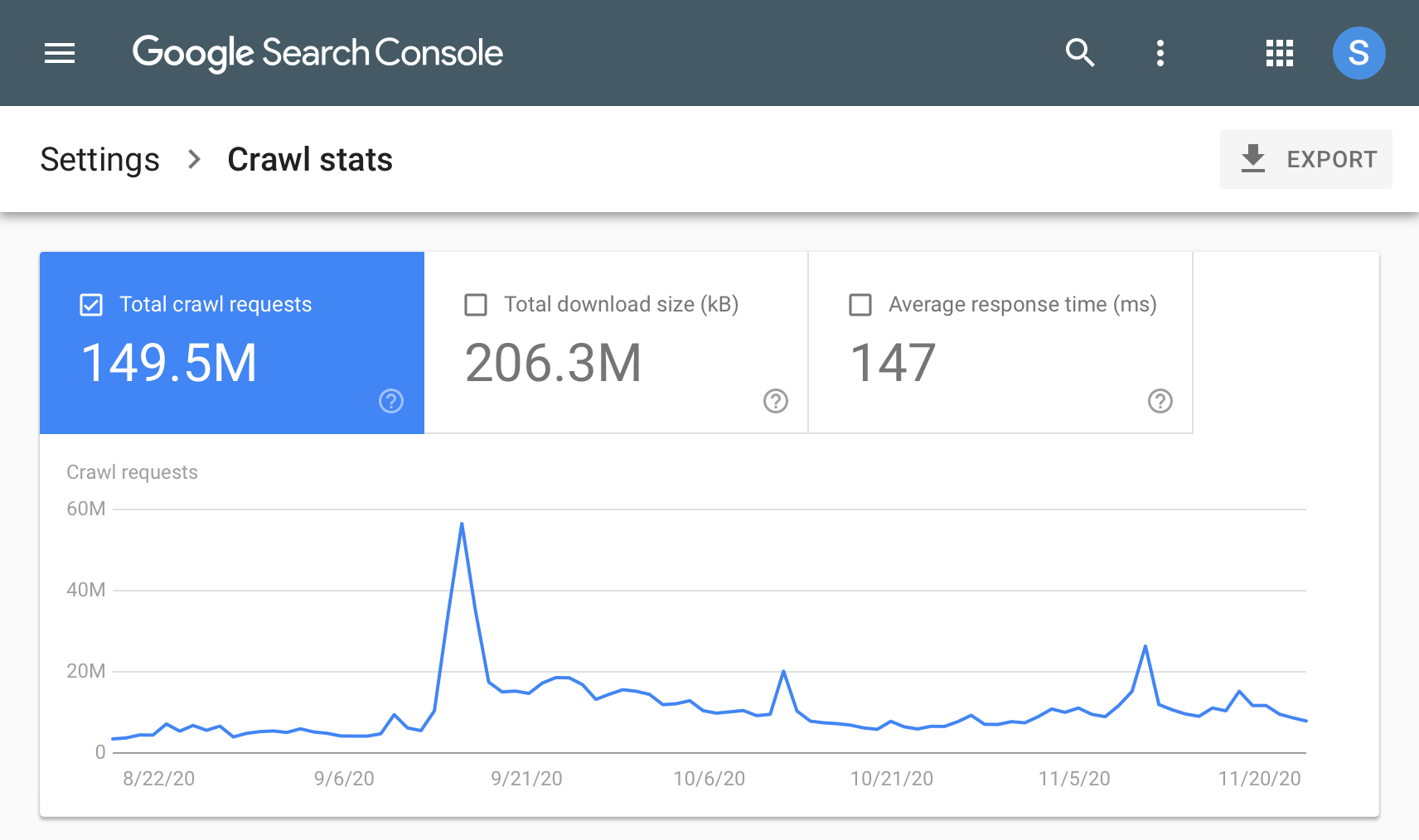

Google Search Console is a free tool that provides valuable insights into how Google indexes your site. You can access the “Coverage” report to see the number of pages crawled and check for any errors that could be affecting your site’s crawl budget. Within “Settings” you can also find a dedicated “Crawl stats” panel.

This is what Google Search Console’s “Crawl stats” panel looks like.

Log files provide detailed information on how search engine crawlers access your site, including the addresses of pages scanned and scanning frequency. By keeping an eye on your log files, you can easily identify any errors and issues that may hinder your crawl budget.
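As a rough sketch of what such log analysis might look like, the snippet below counts Googlebot requests per path and status code. It assumes the common "combined" access log format; the sample lines are invented for illustration, and the pattern will need adjusting for other server configurations. Note that the user agent string can be spoofed, so this is a first approximation rather than a verified list of Google's visits.

```python
import re
from collections import Counter

# Assumed "combined" log format; adjust the pattern to your server's setup.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per (path, status) whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits


# Invented sample lines, for illustration only.
BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/May/2023:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - [10/May/2023:10:05:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - [10/May/2023:10:06:00 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{BOT}"',
    '203.0.113.7 - - [10/May/2023:10:07:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]

for (path, status), count in googlebot_hits(sample).most_common():
    print(path, status, count)
```

Paths that return 404 or 5xx codes to the crawler are the first candidates to fix, since each of those requests uses up crawl budget without yielding an indexable page.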

There are a few universal, sure-fire ways of keeping your crawl budget in check. Implementing the practices outlined below can help you enhance your crawl rate and boost your website’s visibility in search engine result pages.

Search engine crawlers use the robots.txt file to determine which pages of a website they should scan. Excluding your website’s backend resources from scanning in robots.txt can help your crawl budget. For example, if your website has an entire “admin” section, you can include the following lines in your robots.txt file:

    User-agent: *
    Disallow: /admin/

The sitemap is a file that lists all the important pages on your website. It helps search engine spiders understand your site’s structure and determine which pages are the most important ones. By updating your sitemap you can make sure that search engine crawlers are aware of any changes made to your website, including new or updated pages.

A visual representation of a sitemap. Source: venngage.com
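To make the idea of a sitemap file more concrete, here is a minimal sketch that builds one with Python's standard library. The function name `build_sitemap` and the example URLs and dates are assumptions for illustration; a real site would usually generate this list from its CMS or database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is expected to be an ISO date string such as "2023-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, for illustration only.
print(build_sitemap([
    ("https://example.com/", "2023-05-01"),
    ("https://example.com/blog/", "2023-05-10"),
]))
```

Regenerating the file whenever pages are added or updated keeps the `lastmod` values meaningful for crawlers.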

In order to optimize your crawl budget and prevent Google from scanning the same content multiple times, you must make sure that there are no duplicate pages on your website. Duplicate pages confuse search engine crawlers, dilute your traffic, and waste your designated crawl budget. In order to inform search engine spiders which URL to scan and index, you can either remove duplicate content or, if multiple URLs refer to the same page, employ canonical tags. You can find more information on how to detect duplicate pages in our Technical SEO Checklist.
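One possible way to spot URLs that point to the same page is to normalize them and group the results. The sketch below is an assumption-laden illustration: the list of tracking parameters is made up, and treating a trailing slash or host capitalization as irrelevant only holds if your server really serves the same content for both variants.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of parameters that never change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize(url):
    """Return a normalized form of url to use as a candidate canonical URL."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in TRACKING_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def find_duplicates(urls):
    """Group URLs by normalized form and keep groups with more than one variant."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}


urls = [
    "https://example.com/shoes/",
    "https://Example.com/shoes?utm_source=newsletter",
    "https://example.com/hats",
]
for canonical, variants in find_duplicates(urls).items():
    print(f'<link rel="canonical" href="{canonical}" />', variants)
```

Each group found this way is a candidate for a canonical tag pointing at the preferred URL, or for consolidating the variants into one page.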

Another factor that impacts the crawl budget is the website’s load time. Slow pages take crawlers longer to fetch, so fewer of them get scanned within the same budget, and slow site speed can also affect your search engine rankings. There are several ways to improve load time, such as compressing images, minifying CSS and JavaScript, and introducing caching and lazy loading. Reducing load times can significantly enhance both your website’s crawl budget and its overall SEO performance.

Another essential practice to consider when optimizing your crawl budget is to avoid redirect chains and orphan pages. Redirect chains and orphan pages can confuse search engine crawlers and waste valuable crawl budget resources. Therefore, it is crucial to ensure that proper internal linking is in place and that the number of redirects on your website is kept at a minimum. Instead of redirecting to a page, you should always use a direct link to the final destination. This will save the search engine spider’s time, further optimizing your crawl budget.
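Redirect chains can be detected and flattened once you have a map of your redirects. The following sketch assumes that map is available as a plain dictionary of source to target paths (for example, exported from your server configuration); the function names are hypothetical.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow url through the redirect map; return (final_url, hop_count)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Return a map in which every source points straight to its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


# Hypothetical redirect map: /a -> /b -> /c is a two-hop chain.
redirects = {"/a": "/b", "/b": "/c", "/old": "/new"}
print(resolve_redirect("/a", redirects))  # ('/c', 2)
print(flatten_redirects(redirects))       # {'/a': '/c', '/b': '/c', '/old': '/new'}
```

Any source with a hop count above 1 is part of a chain; updating internal links and redirect rules to use the flattened targets spares crawlers the intermediate requests.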

Broken links are bad for your crawl budget because they can lead search engine crawlers to dead ends, wasting valuable time and resources. Tools like Google Search Console can help identify broken links, which can then be fixed or removed.

HTML pages are easier for search engine spiders to crawl and index than other page types. This is why converting all pages to HTML can also help you ensure that your content is easily accessible to search engine crawlers.

Adding parameters to your URLs can give more details about your page content, but it also makes it harder for search engine crawlers to index your website. Using static URLs, such as example.com/page/123, instead of dynamic ones like example.com/page?id=123 is always a good practice. As a result, your website will be more accessible to search engine crawlers.

Hreflang tags are useful in helping search engine crawlers display the correct version of a page to your users based on their preferred language or location. This can improve your website’s visibility in SERPs for users in various regions.

If your website offers multiple language versions, it’s recommended to use hreflang tags to indicate which one to show to a specific user. Doing so can enhance your crawl budget and attract more traffic to your website.
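For illustration, hreflang annotations are typically added as `<link rel="alternate">` elements in the page head. The sketch below generates them from a mapping of language codes to URLs; the function name and the example URLs are assumptions, and each language version of a page should carry the same full set of tags.

```python
from html import escape


def hreflang_tags(versions, default_url=None):
    """Return <link> tags for each language version, plus an optional x-default."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in versions.items()
    ]
    if default_url:
        # x-default marks the fallback page for users matching no listed language.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags


# Hypothetical language versions of one page.
versions = {
    "en": "https://example.com/en/",
    "pl": "https://example.com/pl/",
}
for tag in hreflang_tags(versions, default_url="https://example.com/"):
    print(tag)
```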

Proper content architecture ensures that your content distributes information efficiently and effectively in a way that meets the requirements of your intended audience. There is an increasing demand for well-structured and meaningful content as users seek information that is easily accessible and comprehensible.

Another important practice to keep in mind is internal linking. Internal linking benefits both users and search engines, as it improves the discoverability of your pages. Those that are not linked anywhere else within your content become more difficult to find and are less likely to be crawled regularly. Moreover, internal linking can also result in better rankings for terms related to linked pages.
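Pages that no internal link leads to (orphan pages) can be found by walking the internal link graph from the homepage. This is a minimal sketch that assumes you already have the link graph and the full page list, for example from a site crawl and your sitemap; all names and paths are illustrative.

```python
from collections import deque


def find_orphans(links, all_pages, start="/"):
    """Return pages in all_pages not reachable from start via internal links."""
    reachable = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(all_pages) - reachable)


# Hypothetical link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/landing": ["/"],  # links out, but nothing links to it
}
all_pages = ["/", "/blog", "/about", "/blog/post-1", "/landing"]
print(find_orphans(links, all_pages))  # ['/landing']
```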

As a website owner, it’s important to increase your crawl limit even when there’s high crawl demand. The more pages that search engines crawl, the higher the chance of indexing more content, leading to improved rankings and more organic traffic.

To optimize a site’s crawl budget, there are several methods available. Improving site speed is crucial to having a higher crawl budget. This can be achieved through compressing images, streamlining code, and enabling caching.

Internal linking can help search engine crawlers traverse a site and find important pages. It’s essential to include internal links on every page in order to guide spiders to other relevant sections. Creating an XML sitemap provides a roadmap for search engine crawlers when they crawl a website. Submitting such an XML sitemap to Google can help improve the site’s crawl budget.

Server errors can prevent search engine crawlers from accessing a website. As such, server logs should be monitored and errors fixed promptly. Duplicate content can confuse search engine crawlers and waste the crawl budget. This is why canonical tags should always be used to indicate the preferred URL for search engine crawlers to crawl and index.

If you want to make sure that your SEO meets all the above criteria, we recommend looking into our technical SEO audit services.

Optimizing a website’s crawl budget is crucial for successful search engine optimization (SEO). Crawl budget refers to the resources and time spent by search engines to crawl and index a website. The more pages that search engines crawl, the higher the chance of indexing more site content, which can lead to increased rankings and higher organic traffic.
